INTRODUCTION

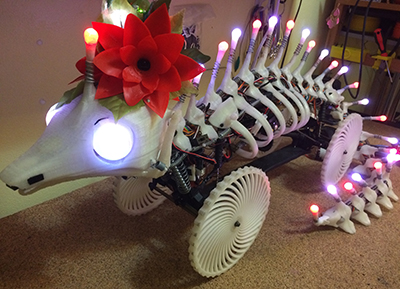

Fatuous Robotic Experiment in Draconiform Ambulation

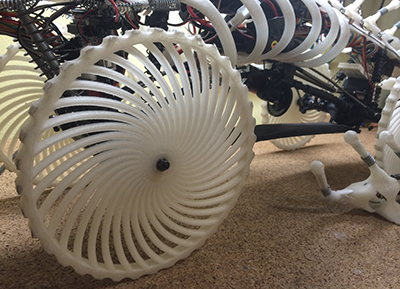

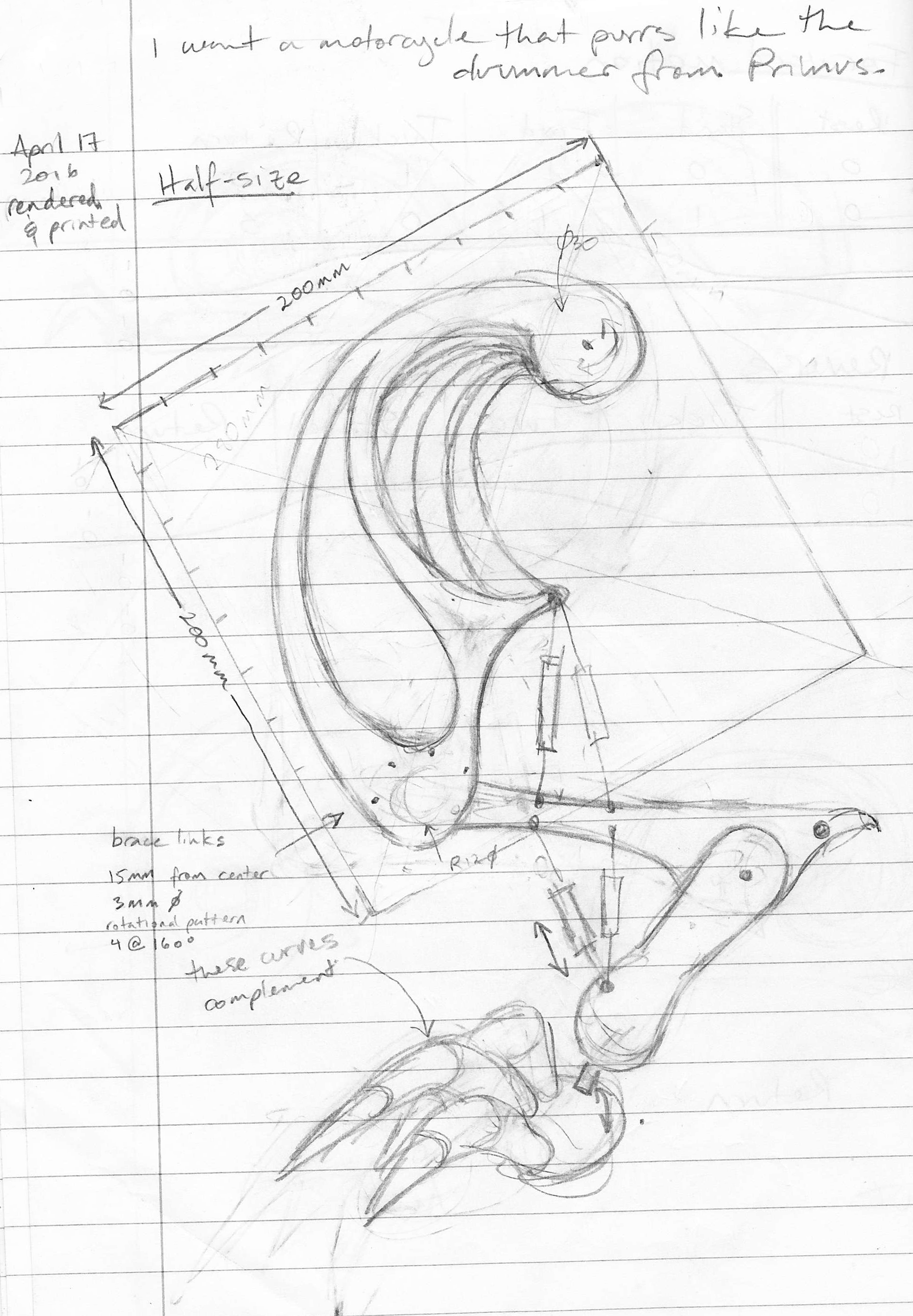

I chose FREDA as a long-term project that would require skills far beyond my current capabilities, so that it would give direction to my study of robotics. I wanted to start from the ground up so I could understand each element along the way. I also wanted to make something weird, just for the fun of it.

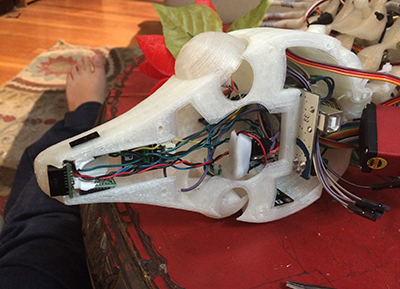

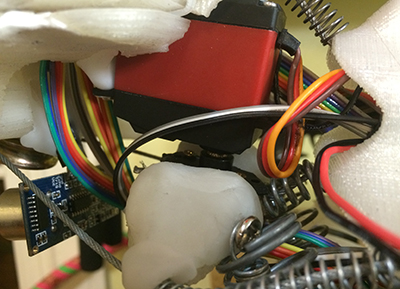

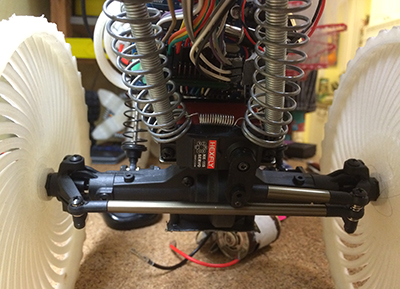

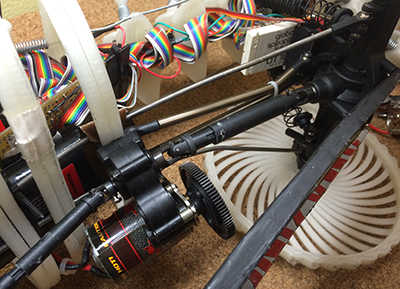

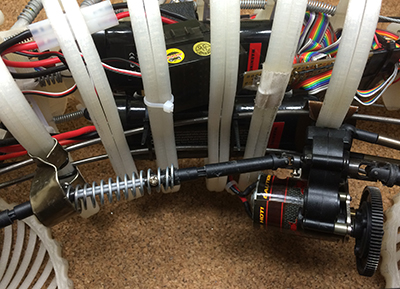

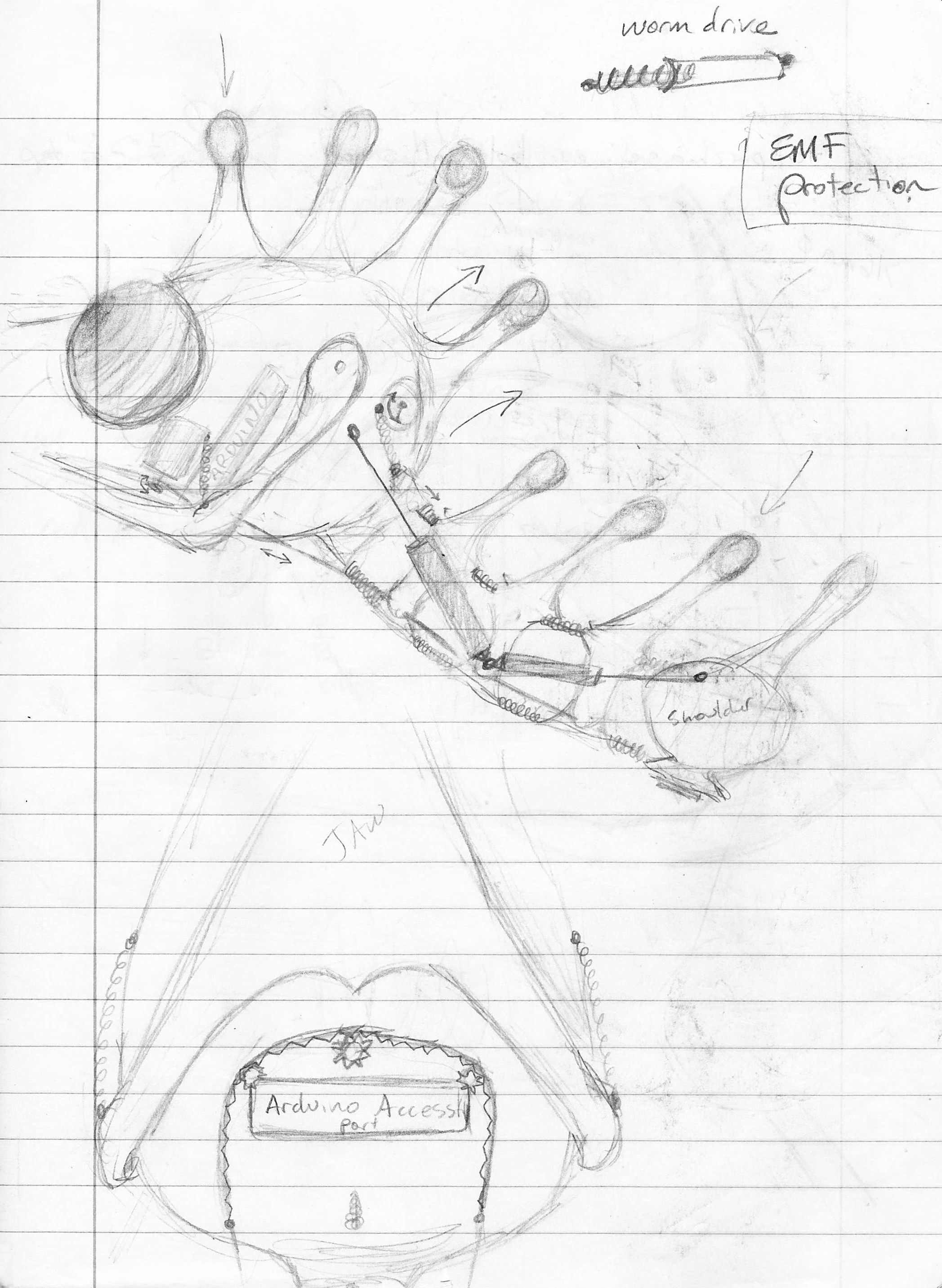

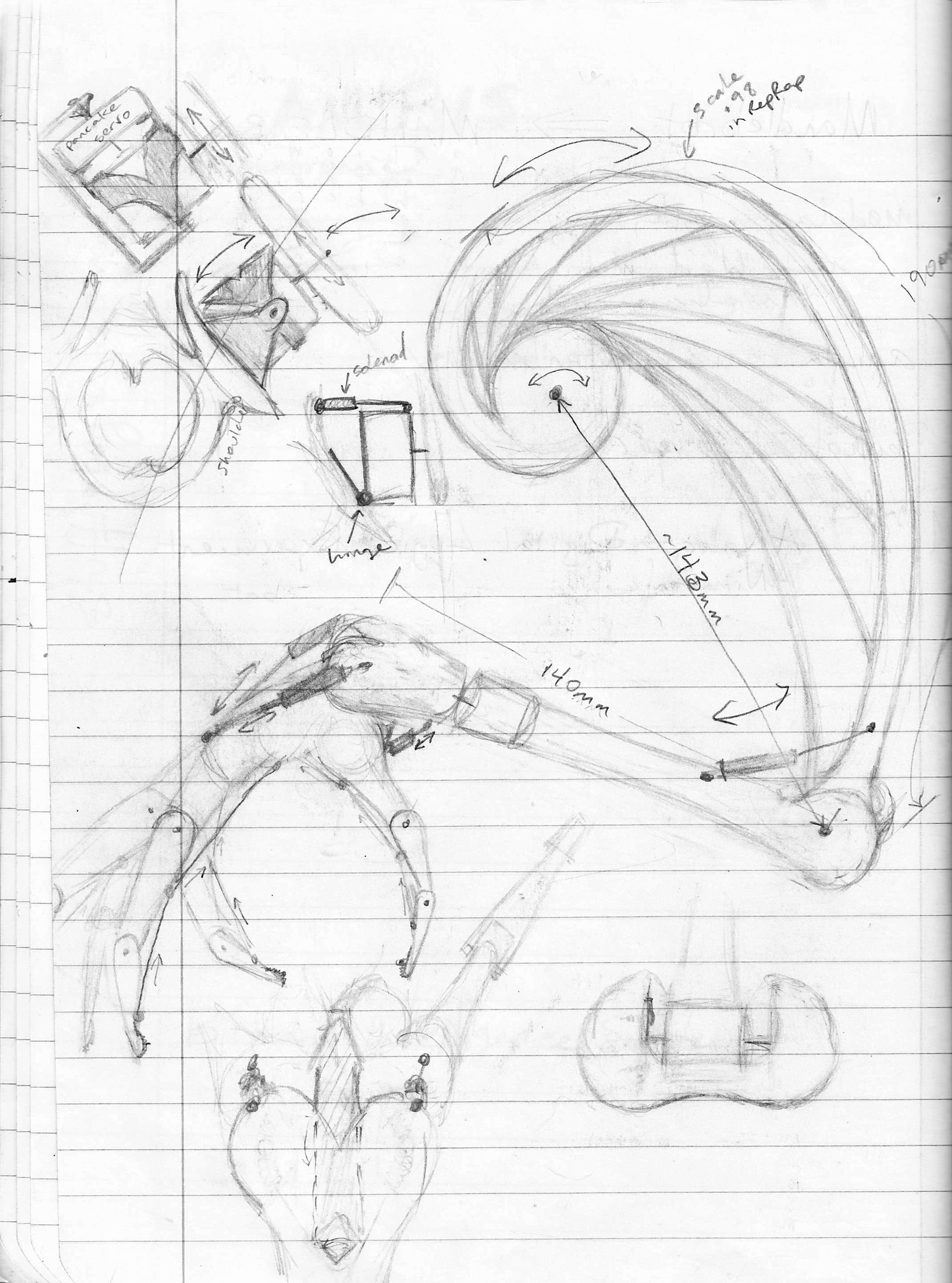

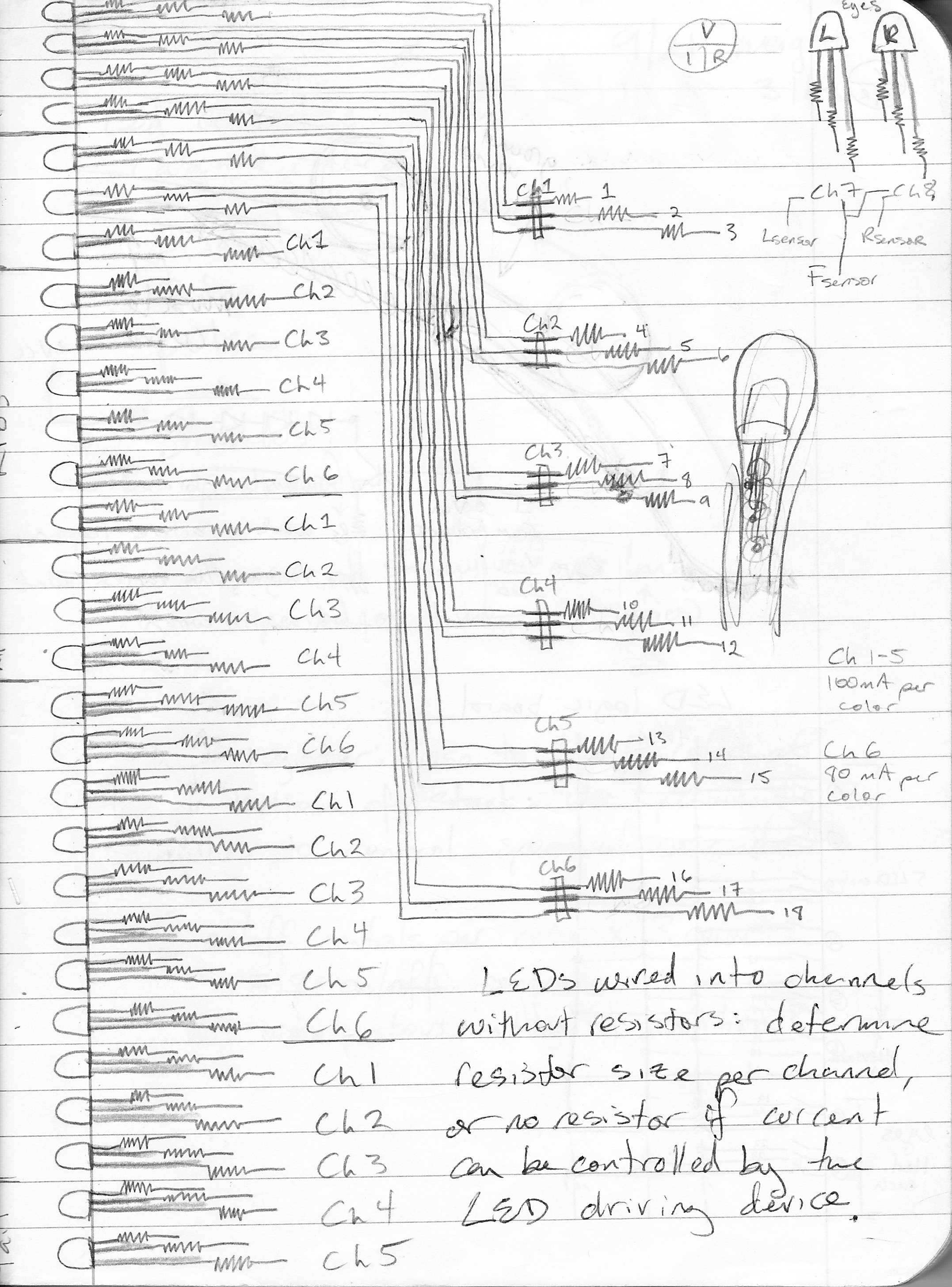

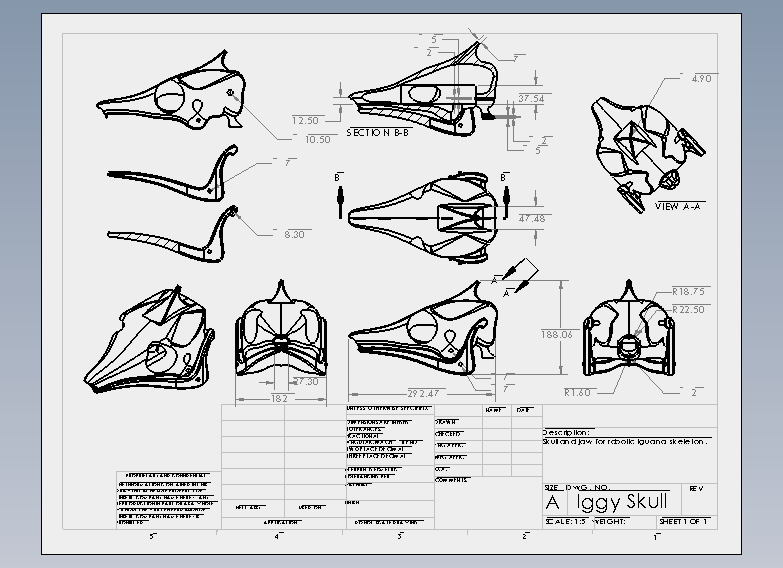

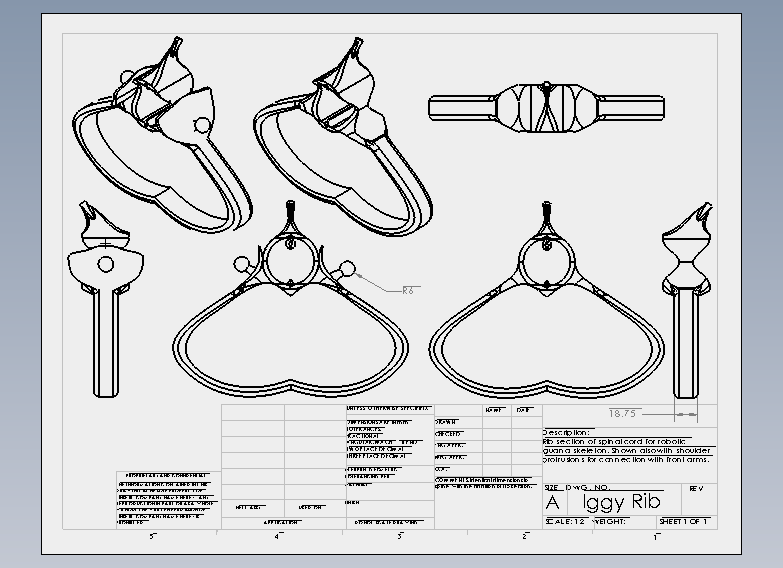

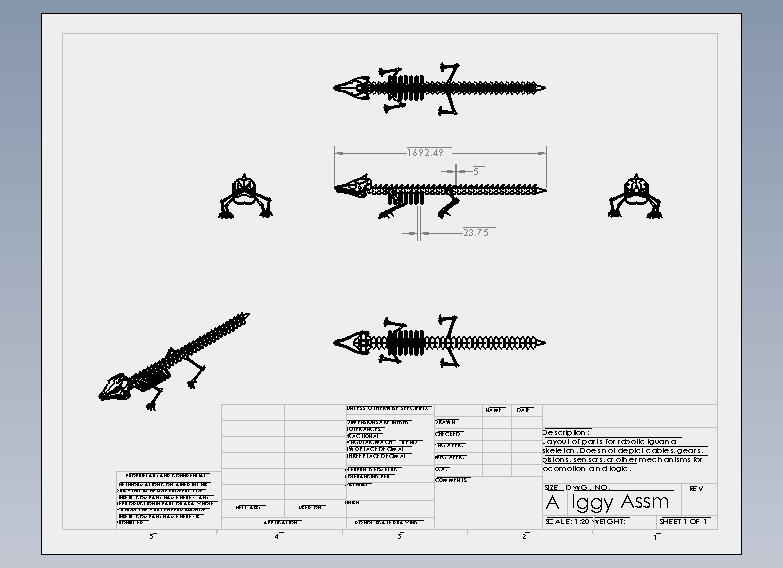

To get started on this project, I first had to learn SolidWorks so I could begin designing the chassis itself. I designed most of the chassis while taking a class at City College of San Francisco. I also took some basic electronics courses and built a Velleman K8200 home 3D printer from a kit, which let me practice electronics assembly with instruction before attempting any from scratch. After printing the chassis, I wired up the 31 RGB LEDs housed along the spine and in each eye, which was the extent of my abilities at the time. Next, I enrolled in a beginning coding class and an Intro to Robotics course at UC Berkeley. What you see on this site is the culmination of everything up to the completion of those two courses.

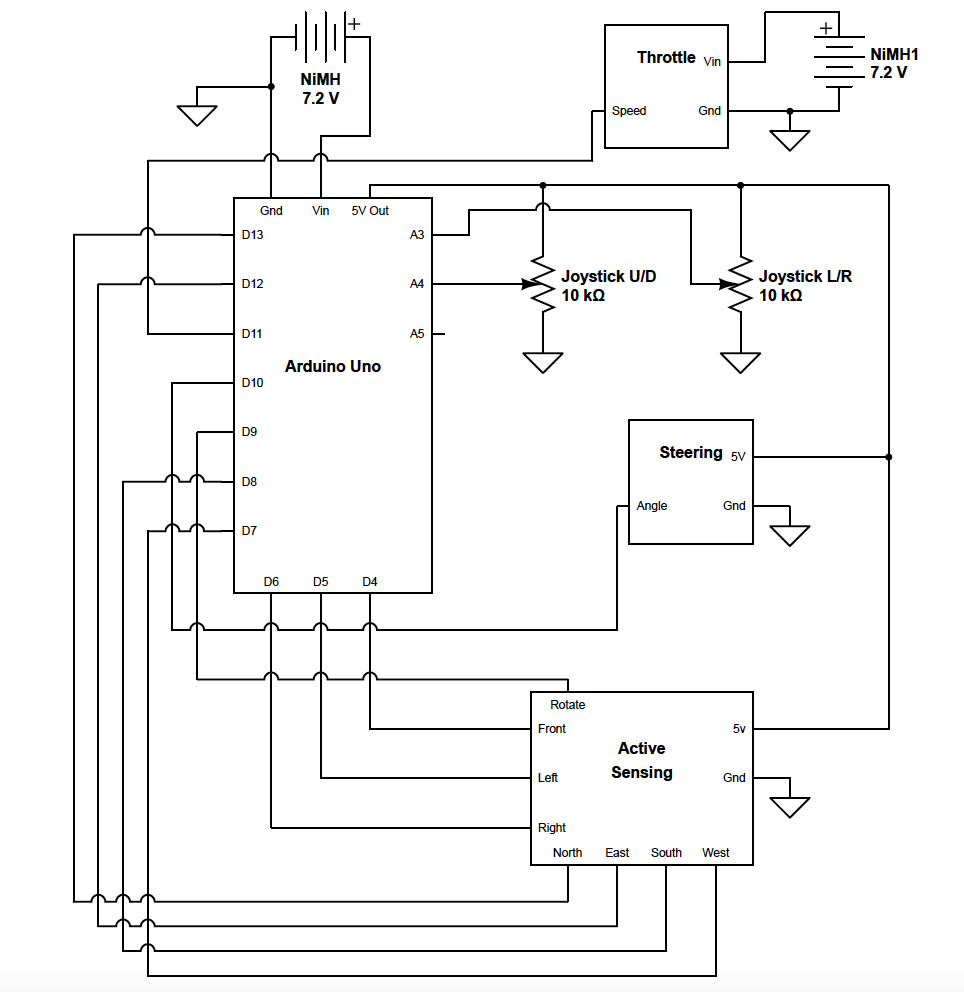

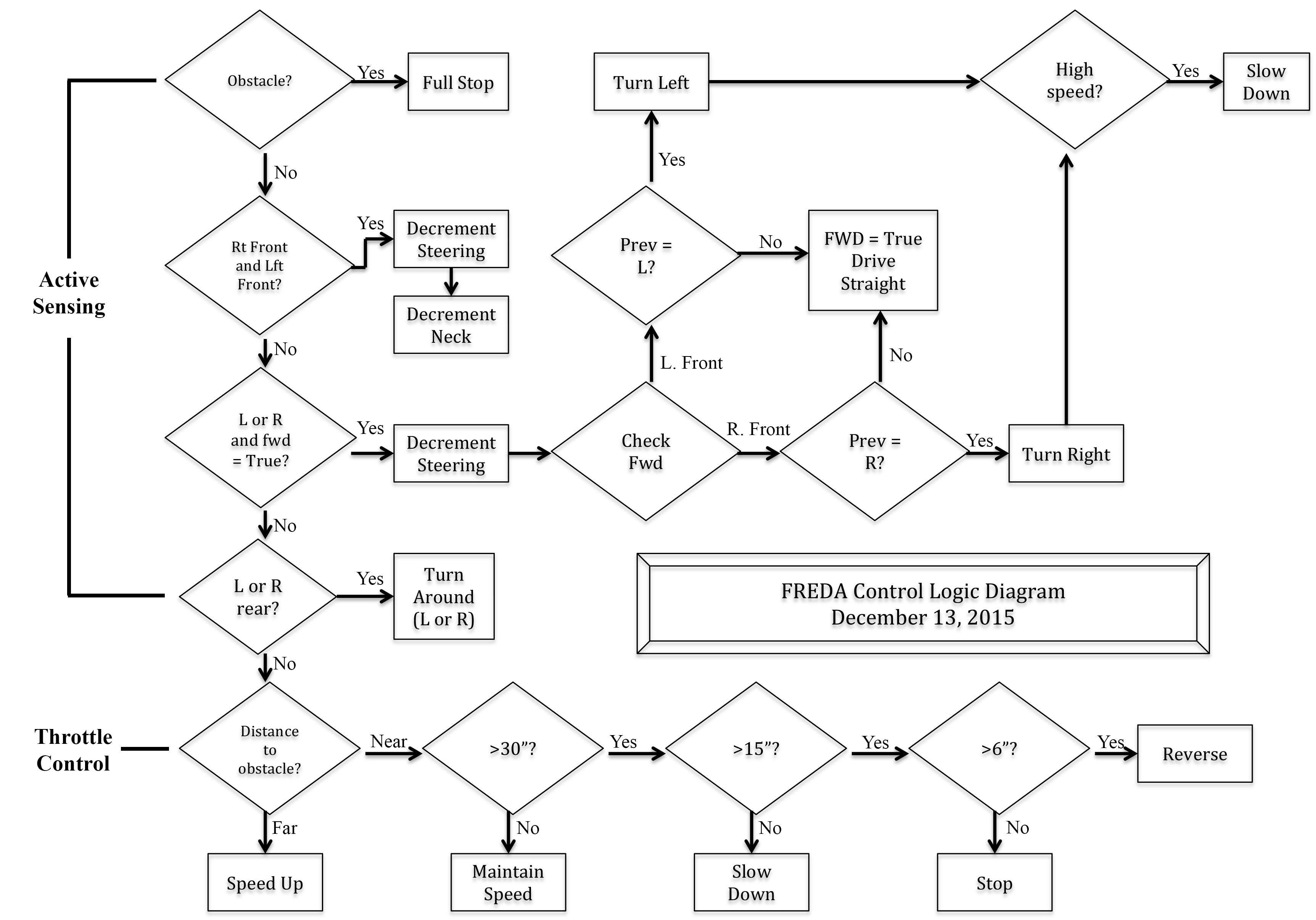

For my robotics final project, the goal was to get FREDA to follow me without crashing into anything. I decided to use a Pololu two-part IR beacon: I would keep one half on my person, and the other would be mounted on FREDA’s head to enable active sensing and inform her path-planning algorithm. I would also use distance sensors around her nose for obstacle avoidance. The major hurdle so far has been figuring out how best to program the Arduino controlling everything so that she responds to sensor input with appropriate timing. In other words, there’s a lot of trial and error, and she’s crashed a lot in the meantime.
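One common way to approach this kind of timing problem on an Arduino is to avoid `delay()` and instead check elapsed time with `millis()` on every pass through `loop()`, while keeping the follow/avoid decision in a small function that can be tested on its own. The following is a minimal, host-testable C++ sketch of that idea, not FREDA’s actual code. It assumes the beacon reading has already been reduced to four on/off direction flags and the nose sensors to two distances in centimeters; the names `Sensors`, `Command`, `decide`, `PeriodicTask`, and the 20 cm threshold are all hypothetical.

```cpp
#include <cstdint>

// Hypothetical snapshot of sensor state. Field names are illustrative only.
struct Sensors {
  bool beaconFront;     // partner beacon detected ahead
  bool beaconLeft;      // partner beacon detected to the left
  bool beaconRight;     // partner beacon detected to the right
  bool beaconBack;      // partner beacon detected behind
  int leftDistanceCm;   // left nose distance sensor reading
  int rightDistanceCm;  // right nose distance sensor reading
};

enum class Command { Stop, Forward, TurnLeft, TurnRight };

constexpr int kObstacleCm = 20;  // assumed safety threshold, tune on hardware

// Pick one motor command. Obstacle avoidance is checked first,
// so it always overrides beacon following.
Command decide(const Sensors& s) {
  const bool leftBlocked = s.leftDistanceCm < kObstacleCm;
  const bool rightBlocked = s.rightDistanceCm < kObstacleCm;
  if (leftBlocked && rightBlocked) return Command::Stop;
  if (leftBlocked) return Command::TurnRight;  // steer away from the left obstacle
  if (rightBlocked) return Command::TurnLeft;  // steer away from the right obstacle

  if (s.beaconFront) return Command::Forward;
  if (s.beaconLeft) return Command::TurnLeft;
  if (s.beaconRight) return Command::TurnRight;
  if (s.beaconBack) return Command::TurnLeft;  // turn around; direction is arbitrary
  return Command::Stop;                        // beacon lost: wait in place
}

// Non-blocking periodic timer in the style of the Arduino millis() pattern.
struct PeriodicTask {
  uint32_t intervalMs;  // how often the task should run
  uint32_t lastRunMs;   // timestamp of the last run

  // Returns true (and records the time) once at least intervalMs has passed.
  // Unsigned subtraction keeps this correct when the millisecond counter wraps.
  bool due(uint32_t nowMs) {
    if (static_cast<uint32_t>(nowMs - lastRunMs) >= intervalMs) {
      lastRunMs = nowMs;
      return true;
    }
    return false;
  }
};
```

On the Arduino itself, `nowMs` would come from `millis()`, and `loop()` would call `decide()` only when a `PeriodicTask` reports it is due, so sensor polling never stalls the rest of the program. Giving obstacle avoidance priority over beacon following is an assumed design choice: a missed step toward the beacon costs less than a collision.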

Robot navigation is, in my opinion, one of the most important aspects of human-robot interaction and is highly relevant to the ever-expanding field of robotics. This particular project could lend itself to fun toys, or perhaps even a robotic assistant. The broader problem of navigating an environment safely and effectively is already being widely explored by researchers in the field, and it applies to any setting in which a robot works alongside a human and safety is a concern.